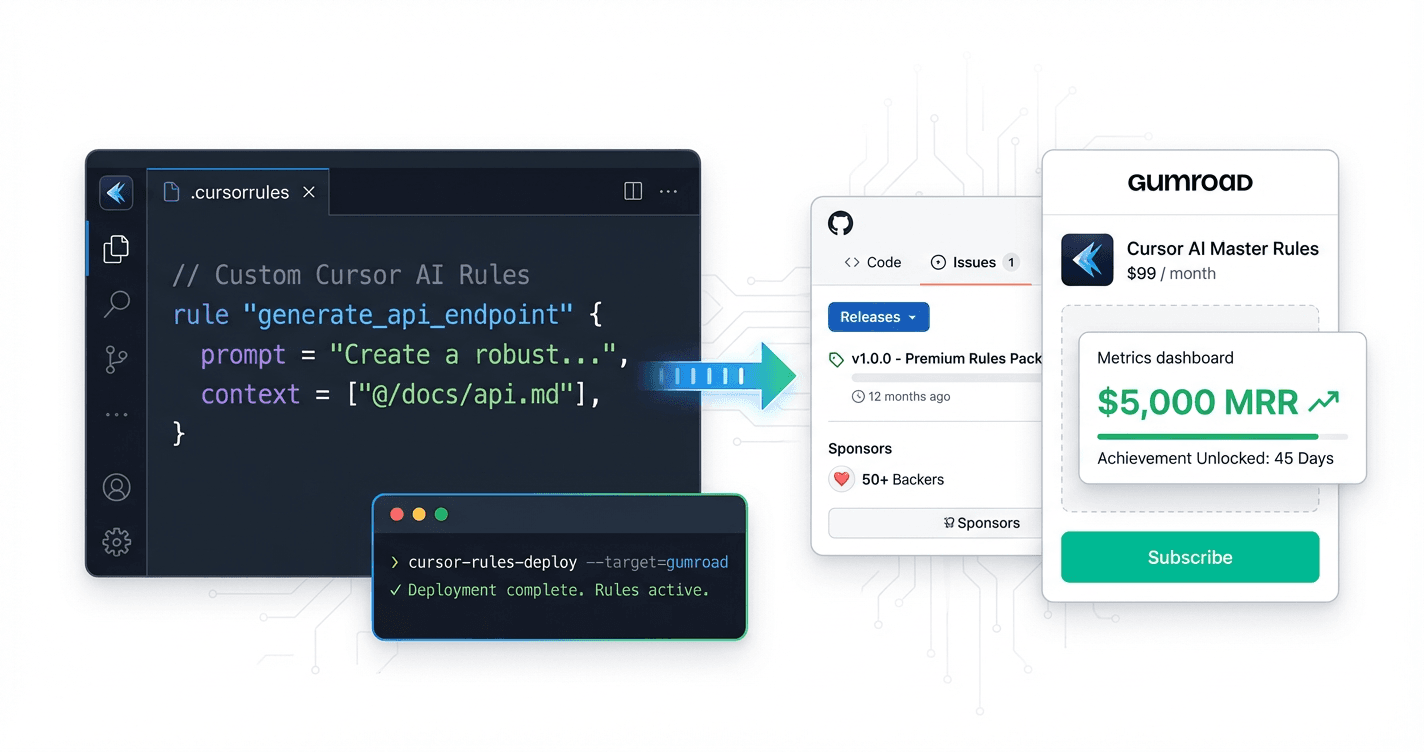

Launch Your Paid Cursor AI Rules Business: From Custom Prompts to $5K MRR in 45 Days

Build and launch a paid Cursor AI rules and prompt-library product in 45 days using a validation-first, engineer-friendly system. You'll identify high-friction coding workflows, package production-ready `.cursorrules` and prompt templates, ship a Gumroad offer with team licensing, and execute a targeted distribution plan to reach your first 100-200 customers at $29-$99 per pack.

You've already built the Cursor AI rules that 10x your own productivity, and your teammates keep asking you to share them. What if, instead of giving away your best prompts for free, you packaged them into a subscription product that generates $5K monthly while you sleep? This course shows you the exact 45-day system to turn your existing AI coding expertise into recurring revenue, without building complex software or needing a massive audience first.

What Students Say

Hear from learners who have completed this course:

Tomás R.

Staff Backend Engineer (FinTech)

I went in thinking “I’ll just sell some prompts,” but Section 2 (Workflow Mining and Demand Proof) completely changed my approach. I used the friction-log template from that section to capture repeated pain points in our Node/TypeScript code reviews (naming consistency, missing edge-case tests, and sloppy API error handling). Then I followed the “demand proof without an audience” playbook—posted a tiny GitHub gist + asked 10 targeted questions in Cursor communities—and got 27 signups for a waitlist in 5 days. The biggest unlock was Section 3’s rules architecture: I split my pack into modular `.cursorrules` (linting style, testing heuristics, and PR review prompts) and added a quality bar checklist so outputs weren’t “AI vibes.” After shipping, I priced it exactly how Section 5 suggested (freemium sample + paid tiers + team licensing). Result: 143 customers in the first month, ~$3.2K revenue, and two small teams purchased licenses instead of individual copies. Bonus: I now use the same rules internally—our PR back-and-forth dropped noticeably because the prompts force better acceptance criteria up front.


Amina E.

Developer Advocate & Technical Content Lead (SaaS)

I’ve launched plenty of content, but I’d never packaged an engineer-facing product. Section 4 (Packaging, Documentation, and Customer-Ready Delivery) was the missing piece: the “README that sells + installs” structure and the folder conventions for prompt libraries made the product feel real instead of like a dump of text files. Section 6 was also surprisingly tactical—especially the Gumroad page structure (hero promise, what’s inside, proof, FAQ, and license terms) and the conversion assets checklist. I implemented the analytics setup exactly as taught and could see where people dropped off. After adjusting the page copy and adding the “team licensing” callout from Section 5, my conversion rate went from ~2.1% to 4.6% over two weeks. Concrete outcome: my Cursor rules pack for “API documentation + SDK snippets” hit $1,780 in the first 21 days, and more importantly, it became a portfolio artifact that helped me negotiate a title bump to Lead DevRel. The course kept me from overbuilding and gave me a repeatable launch template I’ll reuse.


Adaeze O.

Freelance QA Automation Engineer (Cypress/Playwright)

What I liked is that it’s not “build for 45 days and pray.” Section 1’s validation-first criteria and the 45-day calendar forced me to pick a narrow, high-friction workflow. I chose flaky E2E tests and test debugging because it’s what clients constantly pay me to fix. Section 3 helped me engineer the actual product: I created `.cursorrules` that enforce stable selectors, require Arrange-Act-Assert in tests, and generate failure triage prompts (log parsing, reproduction steps, and suggested fixes). I also used the quality bar rubric to prevent the templates from producing pseudo-code that doesn’t compile. Then Section 7’s distribution system was the game-changer for someone without a big audience. I followed the “repeatable launch loops” approach: weekly GitHub examples + short Twitter/X threads showing before/after debugging sessions + posting to Cursor communities. Within 6 weeks I hit 118 customers at $39, and two agencies bought the team license. The unexpected benefit: my client delivery improved—my own debugging time on flaky tests dropped by about 30–40% because I was using the same triage prompts I sold.


Course Overview


Section 1: The 45-Day Plan and Validation-First Product Criteria

Define the exact constraints, milestones, and success metrics for a 45-day launch so you don't drift into "endless polishing." You'll set up a simple scoring model to pick a market where rules libraries create measurable time savings and where buyers already pay for developer productivity.

Learning Outcomes:

- Translate the 45-day goal (first 100-200 customers) into weekly deliverables, time blocks, and leading indicators.

- Apply a validation scorecard (pain intensity, repeat frequency, buyer density, willingness to pay, differentiation) to shortlist 3-5 candidate packs.

- Define a narrow ICP and "job story" for a pack (framework + workflow + output) that avoids generic prompt bundles.

Most developers fail at selling software products not because they can't code, but because they treat product launches like hobby projects rather than engineering problems.

You have likely spent hours optimizing your .cursorrules file or fine-tuning a system prompt to shave 30 seconds off a repetitive task. You understand leverage. Yet, when it comes to monetization, you likely fall into the "Build Trap": spending three months polishing a massive library of prompts, only to launch to silence because nobody actually has the problem you solved.

In this section, we are replacing the "build then pray" approach with a constrained, validation-first architecture. We will treat your business launch like a software sprint: strict time-boxing, defined deliverables, and pass/fail integration tests before deployment.

The 45-Day Launch Architecture

To hit the goal of $3K-$10K MRR, we must invert the typical process. Instead of starting with the product (the prompt library), we start with the constraint.

We are setting a hard 45-day deadline to go from zero to your first 100 paying customers. Why 45 days? Because it forces you to focus on the "Minimum Viable Solution." If the initial version does not fit inside the Build window (Days 15-30), you are over-engineering.

Here is the sprint schedule we will follow. This assumes you are working 10-15 hours a week (evenings and weekends) while maintaining your full-time role.

| Phase | Timeline | Primary Objective | Deliverable |
|---|---|---|---|
| 1. Discovery | Days 1-7 | Identify high-friction developer workflows. | A shortlist of 3 distinct "Job Stories." |
| 2. Validation | Days 8-14 | Confirm willingness to pay (WTP). | 50+ waitlist signups or 5-10 pre-sales. |
| 3. Build | Days 15-30 | Create the core `.cursorrules` and prompt pack. | A functional, tested "Alpha" version. |
| 4. Packaging | Days 31-37 | Set up distribution (Gumroad/Lemon Squeezy). | Product page and documentation. |
| 5. Deployment | Days 38-45 | Execute the launch sequence. | First 100 customers acquired. |

Key Insight: Most developers spend the vast majority of their time in Phase 3 (Building). This plan allocates only 16 of the 45 days (roughly a third) to building. The value is created in the selection of the problem (Discovery) and the verification of the market (Validation).
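If you prefer to keep plans in code, the sprint table can be encoded as data and sanity-checked. The sketch below is a minimal illustration, not part of the course materials: `Phase`, `PLAN`, `check_plan`, `phase_for_day`, and `hours_budget` are hypothetical names, and the hour budget simply prorates a weekly commitment (the 10-15 hours assumed above) across each phase's calendar days.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    name: str
    first_day: int  # inclusive
    last_day: int   # inclusive
    deliverable: str

    @property
    def days(self) -> int:
        return self.last_day - self.first_day + 1


# The five phases from the sprint table (day ranges are inclusive).
PLAN = [
    Phase("Discovery", 1, 7, "Shortlist of 3 Job Stories"),
    Phase("Validation", 8, 14, "50+ waitlist signups or 5-10 pre-sales"),
    Phase("Build", 15, 30, "Functional, tested Alpha version"),
    Phase("Packaging", 31, 37, "Product page and documentation"),
    Phase("Deployment", 38, 45, "First 100 customers acquired"),
]


def check_plan(plan: list[Phase], total_days: int = 45) -> None:
    """Raise if phases overlap, leave gaps, or do not end exactly on the last day."""
    expected_start = 1
    for phase in plan:
        if phase.first_day != expected_start:
            raise ValueError(f"{phase.name} starts on day {phase.first_day}, expected {expected_start}")
        expected_start = phase.last_day + 1
    if expected_start - 1 != total_days:
        raise ValueError(f"Plan ends on day {expected_start - 1}, not day {total_days}")


def phase_for_day(plan: list[Phase], day: int) -> Phase:
    """Return the phase that a given calendar day falls into."""
    for phase in plan:
        if phase.first_day <= day <= phase.last_day:
            return phase
    raise ValueError(f"Day {day} is outside the plan")


def hours_budget(phase: Phase, hours_per_week: float) -> float:
    """Prorate a weekly time commitment over the phase's calendar days."""
    return phase.days / 7 * hours_per_week


check_plan(PLAN)
for phase in PLAN:
    share = phase.days / 45
    print(f"{phase.name:<11} {phase.days:>2} days  {share:5.1%}  ~{hours_budget(phase, 10):.0f}-{hours_budget(phase, 15):.0f} h")
```

With the table's ranges, Build gets 16 of 45 days (about 36%), which matches the roughly-one-third allocation described above, or about 23-34 hours at 10-15 hours a week.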

The "Validation-First" Scorecard

You are not building "AI prompts." You are selling developer velocity. To ensure you pick a market where velocity is worth paying for, you will score your ideas against five variables.

Do not rely on intuition. Run your potential ideas through this scorecard. Rate each variable from 1 (Low) to 5 (High).

- Pain Intensity: How frustrating is the manual workflow? (e.g., configuring Webpack from scratch is a 5; writing a simple for-loop is a 1).

- Repeat Frequency: How often does the developer face this problem? (Daily is a 5; once a year is a 1).

- Buyer Density: Are there easily identifiable communities where these people hang out? (e.g., the Supabase Discord is a 5; "General web developers" is a 1).

- Willingness to Pay (WTP): Does this audience already pay for tools? (Senior devs/Freelancers are a 5; CS students are a 1).

- Differentiation Potential: How hard is this to replicate with a generic ChatGPT prompt? (Complex framework migrations are a 5; "Write me a blog post" is a 1).

Passing Score: A viable product idea must score at least 20/25.

Example Calculation:

- Idea A: Generic "Code Helper" Prompts.
  - Pain: 2, Frequency: 5, Density: 1, WTP: 1, Diff: 1. Total: 10 (FAIL)

- Idea B: Next.js 14 App Router Migration & Best Practices Rules.
  - Pain: 5, Frequency: 3, Density: 5, WTP: 4, Diff: 4. Total: 21 (PASS)
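To keep the scoring consistent across your 3-5 candidates, you can encode the scorecard instead of tallying it by hand. A minimal Python sketch, assuming the five variables, the 1-5 scale, and the 20/25 threshold above; `IdeaScore` and `shortlist` are illustrative names, and the two ideas reproduce the example calculation.

```python
from dataclasses import dataclass, fields

PASSING_SCORE = 20  # out of 25, per the scorecard


@dataclass(frozen=True)
class IdeaScore:
    name: str
    pain: int
    frequency: int
    density: int
    wtp: int
    differentiation: int

    def __post_init__(self) -> None:
        # Every variable is rated on a 1 (Low) to 5 (High) scale.
        for f in fields(self):
            if f.name == "name":
                continue
            value = getattr(self, f.name)
            if not 1 <= value <= 5:
                raise ValueError(f"{self.name}: {f.name}={value} is outside 1-5")

    @property
    def total(self) -> int:
        return self.pain + self.frequency + self.density + self.wtp + self.differentiation

    @property
    def passes(self) -> bool:
        return self.total >= PASSING_SCORE


def shortlist(ideas: list[IdeaScore], limit: int = 5) -> list[IdeaScore]:
    """Keep passing ideas only, highest total first, capped at `limit` candidates."""
    passing = [idea for idea in ideas if idea.passes]
    return sorted(passing, key=lambda idea: idea.total, reverse=True)[:limit]


idea_a = IdeaScore('Generic "Code Helper" Prompts', 2, 5, 1, 1, 1)
idea_b = IdeaScore("Next.js 14 App Router Migration & Best Practices Rules", 5, 3, 5, 4, 4)
for idea in (idea_a, idea_b):
    print(idea.name, idea.total, "PASS" if idea.passes else "FAIL")
# Generic "Code Helper" Prompts 10 FAIL
# Next.js 14 App Router Migration & Best Practices Rules 21 PASS
```

The range check in `__post_init__` is there so a typo like a 6 cannot quietly push an idea over the threshold.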

Pro Tip: Focus on "Migration" and "Configuration" pains. These are high-stakes, high-complexity moments where developers are terrified of breaking things. A `.cursorrules` set that keeps a migration clean is far more valuable than a generic "code explainer."

Defining the ICP and "Job Story"

One of the biggest mistakes technical founders make is defining their Ideal Customer Profile (ICP) too broadly. "React Developers" is not a niche. It is a census category.

To sell effectively, you need to define a Job Story. This framework replaces user personas with workflow context. It follows this syntax:

"When [Situation], I want to [Action], so that I can [Outcome]."

Let's look at the difference between a weak definition and a strong, saleable definition.

The Weak Definition (Generic):

- Target: JavaScript Developers.

- Product: A bundle of 500 prompts for coding.

- Result: You compete with free tools. $0 revenue.

The Strong Definition (Specific):

- Target: Solo-founder/Freelance Full-stack devs using Supabase & Next.js.

- Situation: When I am setting up a new SaaS project and need to implement Row Level Security (RLS) policies...

- Action: I want to use a verified `.cursorrules` file that auto-generates secure policies based on my schema...

- Outcome: So that I don't accidentally expose user data and can ship the MVP this weekend.

Notice the difference? The second example sells security and speed. It solves a specific, terrifying problem. That is something a senior developer will happily pay $50 for to avoid three hours of documentation reading.

Important: Your product is not the prompts themselves. Your product is the assurance that the code generated by the prompts follows strict architectural patterns that the buyer cares about.
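If you draft several Job Stories (the checklist below asks for 3), keeping them as structured records makes them easy to compare and reuse in outreach copy. A hypothetical sketch: the `JobStory` class is illustrative, and the outcome is lightly rephrased from the strong definition above so it fits the "so that I can" slot of the template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobStory:
    target: str     # the narrow ICP, e.g. a specific stack rather than "React Developers"
    situation: str
    action: str
    outcome: str

    def __post_init__(self) -> None:
        # A story with an empty slot is not ready to test with buyers.
        for slot in ("target", "situation", "action", "outcome"):
            if not getattr(self, slot).strip():
                raise ValueError(f"Job Story is missing its {slot}")

    def render(self) -> str:
        """Fill the 'When [Situation], I want to [Action], so that I can [Outcome].' template."""
        return f"When {self.situation}, I want to {self.action}, so that I can {self.outcome}."


rls_story = JobStory(
    target="Solo-founder/freelance full-stack devs using Supabase & Next.js",
    situation="I am setting up a new SaaS project and need Row Level Security (RLS) policies",
    action="use a verified .cursorrules file that generates secure policies from my schema",
    outcome="ship the MVP this weekend without exposing user data",
)
print(rls_story.render())
```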

Leading Indicators vs. Vanity Metrics

As we move through the 45-day plan, we need metrics to verify we are on track. Avoid vanity metrics like "Twitter Impressions" or "LinkedIn Likes." These correlate poorly with purchase intent.

Instead, track these Leading Indicators:

- Conversation Rate: In the Discovery phase, how many developers reply to your DM/comment when you ask about their workflow pain? (Benchmark: >20%)

- Waitlist Conversion: When you put up a simple landing page describing the solution, what % of visitors drop their email? (Benchmark: >15%)

- Beta Uptake: If you offer a "stripped down" free version of one rule, how many people download it and actually execute it?

If you hit Day 14 and have zero email signups, do not proceed to build. You have failed the validation test. Loop back to Discovery. This saves you 30 days of wasted coding.
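A lightweight way to enforce the Day 14 rule is to log your numbers once a day and let a script make the call. Minimal sketch: the `Snapshot` fields and `review` function are hypothetical, "Beta Uptake" is assumed to mean runs divided by downloads, and anything between zero signups and the sprint table's 50-signup target is left as a judgment call because the text above does not define it.

```python
from dataclasses import dataclass

# Benchmarks from the Leading Indicators list. Beta Uptake has no stated
# benchmark, so it is reported but not scored.
CONVERSATION_BENCHMARK = 0.20
WAITLIST_BENCHMARK = 0.15
# Validation targets from the Days 8-14 row of the sprint table.
SIGNUP_TARGET = 50
PRESALE_TARGET = 5


def rate(part: int, whole: int) -> float:
    return part / whole if whole else 0.0


@dataclass(frozen=True)
class Snapshot:
    day: int
    dms_sent: int
    replies: int
    landing_visitors: int
    email_signups: int
    presales: int
    beta_downloads: int
    beta_runs: int  # people who actually executed the free rule


def review(s: Snapshot) -> dict:
    conversation = rate(s.replies, s.dms_sent)
    waitlist = rate(s.email_signups, s.landing_visitors)
    if s.email_signups >= SIGNUP_TARGET or s.presales >= PRESALE_TARGET:
        decision = "proceed to Build"
    elif s.day < 14:
        decision = "keep validating"
    elif s.email_signups == 0:
        decision = "loop back to Discovery"
    else:
        # Only the zero-signup stop is defined; the middle ground is your call.
        decision = "below target: decide before building"
    return {
        "conversation_rate": conversation,
        "conversation_ok": conversation > CONVERSATION_BENCHMARK,
        "waitlist_conversion": waitlist,
        "waitlist_ok": waitlist > WAITLIST_BENCHMARK,
        "beta_uptake": rate(s.beta_runs, s.beta_downloads),
        "decision": decision,
    }


print(review(Snapshot(day=14, dms_sent=40, replies=10, landing_visitors=300,
                      email_signups=54, presales=2, beta_downloads=30, beta_runs=12)))
```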

Summary Checklist for Section 1

Before moving to the next section, ensure you have:

- Committed to the 45-day timeline and blocked out 10 hours/week on your calendar.

- Adopted the "Validation-First" mindset: No code is written until demand is verified.

- Drafted 3 potential "Job Stories" based on your own recent coding struggles.

- Understood that you are targeting experienced devs who value time over money, not beginners looking for shortcuts.

Coming Up Next: Now that we have our constraints and scoring model, we need raw data to feed it. In Section 2: Workflow Mining and Demand Proof, we will perform a "forensic audit" of real developer workflows and GitHub repositories to extract highly profitable product ideas you didn't even know you had.

Section 2: Workflow Mining and Demand Proof (Without an Audience)

Extract real, high-value prompt and rules opportunities from developer behavior rather than guessing. You'll run fast demand tests using lightweight interviews, GitHub/issue mining, and channel-specific outreach to confirm people will pay before you build.

Learning Outcomes:

- Conduct 10-15 workflow interviews using a structured script to identify repeatable prompt sequences worth packaging.

- Mine GitHub repos, issues, and discussion boards to find recurring "AI friction" patterns (refactors, tests, migrations, bug triage, code review).

- Validate pricing and positioning with a pre-sell or waitlist experiment (with acceptance criteria and go/no-go thresholds).

Most developers fail at productization because they start with the solution. You write a brilliant .cursorrules file for your own workflow, assume everyone else needs it, build a landing page, and then hear crickets.

The "Field of Dreams" approach (if you build it, they will come) is a fatal error in the developer tools market. Your peers are sophisticated, skeptical, and drowning in free tools. To get them to open their wallets, you cannot guess at their pain; you must prove with evidence that it exists before you write a single line of production prompt code.

This section covers Workflow Mining. This is a systematic process of extracting high-value friction points from developer behavior. We are moving from "I think this is useful" to "I have evidence that 50 developers are currently struggling with this specific migration."

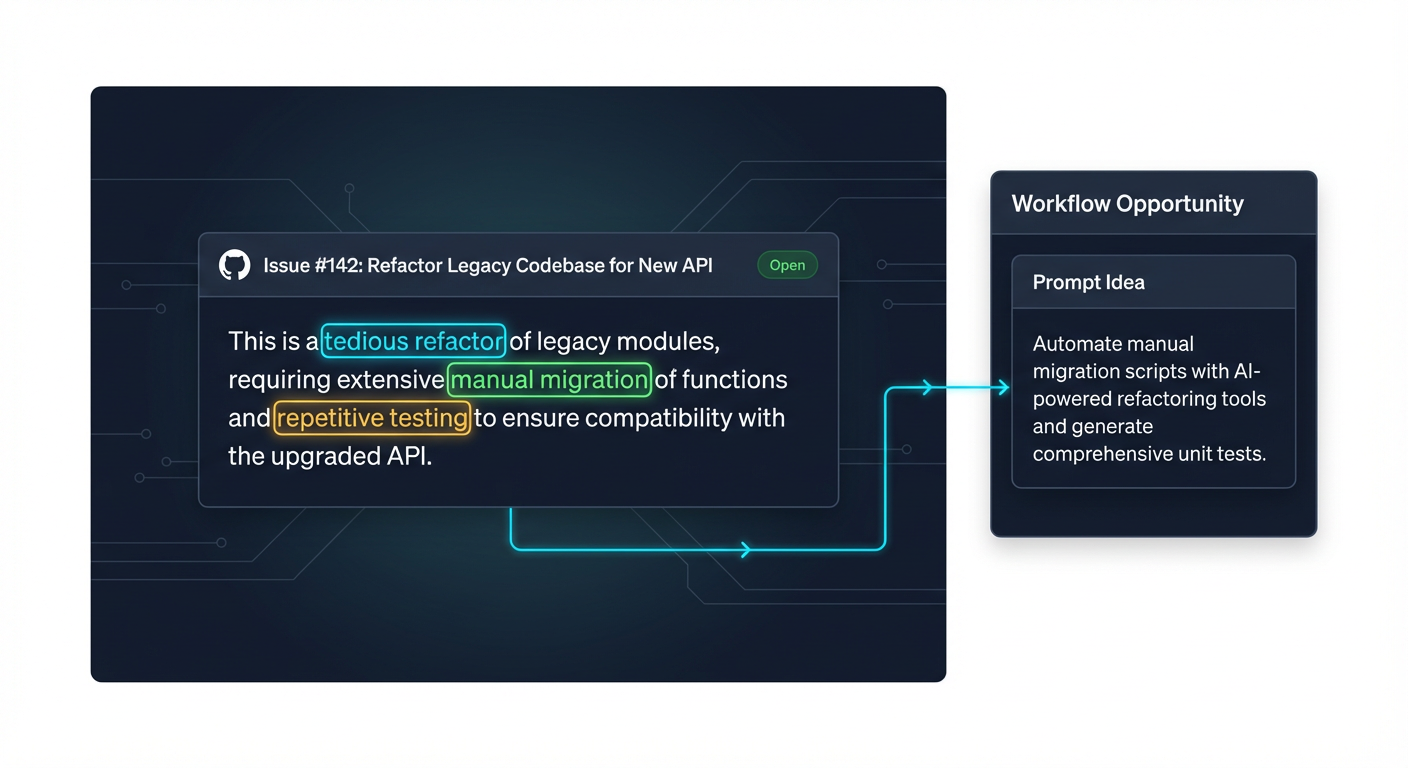

The GitHub "Friction Mining" Protocol

Your target buyers leave a digital paper trail of their frustrations every single day. GitHub issues, discussion boards, and unanswered Stack Overflow questions are not just support tickets; they are market demand signals.

Instead of brainstorming ideas, you will mine open-source repositories for "Configuration Hell" and "Repetitive Refactoring": the two areas where Cursor AI rules shine brightest.

The Search Query Strategy

You are looking for libraries or frameworks that are popular but notoriously difficult to configure, migrate, or test. Use these search patterns in GitHub Issues:

- `"how do I configure" is:issue is:open sort:comments`

- `"migration guide" is:issue label:documentation`

- `"boilerplate" OR "starter" sort:stars` (run this one as a repository search; `sort:stars` sorts repositories, not issues)

- `"best practice" language:typescript`

Pro Tip: Look specifically for "v2 to v3" migration issues. Major version upgrades often break tailored workflows. A `.cursorrules` file that auto-detects deprecated syntax and suggests the exact v3 replacement is a product developers will pay for instantly to save 20 hours of manual refactoring.
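Once an issue query looks promising in the browser, you can rerun it on a schedule through GitHub's REST search endpoint (`GET /search/issues`) and track the most-discussed hits over time. The sketch below uses only the Python standard library; the helper names are hypothetical, unauthenticated requests are heavily rate-limited, and you should confirm the details against GitHub's current search API documentation before relying on it.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

SEARCH_ISSUES_URL = "https://api.github.com/search/issues"


def build_issue_search_url(query: str, sort: str = "comments", per_page: int = 30) -> str:
    """Turn a web-style issue query into a REST search URL.

    The web UI's `sort:comments` qualifier becomes the API's `sort` parameter
    here, so leave it out of `query`.
    """
    if "is:issue" not in query:
        query += " is:issue"  # the endpoint searches pull requests too unless restricted
    params = {"q": query, "sort": sort, "order": "desc", "per_page": per_page}
    return f"{SEARCH_ISSUES_URL}?{urllib.parse.urlencode(params)}"


def fetch_issue_hits(url: str, token: Optional[str] = None) -> list[tuple[str, int, str]]:
    """Return (title, comment count, link) per hit. Makes a live network call."""
    headers = {"Accept": "application/vnd.github+json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return [(item["title"], item["comments"], item["html_url"]) for item in payload["items"]]


url = build_issue_search_url('"how do I configure" is:issue is:open')
print(url)
# for comments, title, link in ((c, t, l) for t, c, l in fetch_issue_hits(url)):
#     print(comments, title, link)
```

Comment count is only a rough proxy for "many people hit this"; reading the threads is still the actual mining step.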

Analyzing the Signal

Once you find a cluster of complaints, categorize them to see if they fit a paid prompt product model:

| Signal Type | Description | Commercial Viability |
|---|---|---|
| "It's Broken" | Bug reports or core logic errors. | Low. This requires a patch, not a prompt. |
| "It's Tedious" | Writing boilerplate, setting up strict TS config, writing unit tests for edge cases. | High. AI excels here. A rule set that enforces strict boilerplate is high-value. |
| "It's Complex" | Configuring AWS CDK, Webpack, or highly specific architectural patterns (e.g., DDD). | Very High. Senior devs pay to offload mental complexity. |
| "It's a One-off" | "How do I install this?" | Low. They do it once and forget it. |
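When a search returns hundreds of issues, a rough first-pass sort helps you decide which threads to read closely. The sketch below is a hypothetical keyword heuristic, not a reliable classifier: the keyword lists and example titles are invented for illustration, and only the viability labels come from the table.

```python
from collections import Counter

# Hypothetical keyword heuristics for a first pass; checked in this order and
# the first match wins. Tune them against issues you have read by hand.
SIGNAL_KEYWORDS = {
    "It's a One-off": ("how do i install", "installation"),
    "It's Broken": ("crash", "regression", "throws", "broken"),
    "It's Complex": ("configure", "configuration", "migration", "migrate", "cdk", "webpack"),
    "It's Tedious": ("boilerplate", "unit test", "repetitive", "tsconfig"),
}
# Commercial viability per signal type, from the table above.
VIABILITY = {
    "It's Broken": "Low",
    "It's Tedious": "High",
    "It's Complex": "Very High",
    "It's a One-off": "Low",
}


def classify(title: str) -> str:
    lowered = title.lower()
    for signal, keywords in SIGNAL_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return signal
    return "Unclassified"


def cluster_report(titles: list[str]) -> tuple[Counter, float]:
    """Count signal types and the share of titles with High or Very High viability."""
    counts = Counter(classify(title) for title in titles)
    viable = sum(n for signal, n in counts.items() if VIABILITY.get(signal) in ("High", "Very High"))
    return counts, (viable / len(titles) if titles else 0.0)


titles = [
    "Migration from v2 to v3 breaks custom loaders",
    "Crash when calling init() twice",
    "How do I install on Windows?",
    "Writing boilerplate for every new route",
    "Question about default colors",
]
counts, viable_share = cluster_report(titles)
print(counts.most_common(), f"{viable_share:.0%} commercially viable")
```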

The Deliverable: Identify 3 specific friction points (e.g., "Setting up Shadcn UI with custom theme tokens takes too long manually") that you can solve via a .cursorrules file.

The 15-Minute Extraction Interview

You do not need an audience to validate demand; you need 10-15 conversations. However, do not ask "Would you buy this?" People lie to be polite.

Instead, conduct "Workflow Forensic" interviews. Reach out to peers, colleagues, or Discord members with a specific request: "I'm trying to automate a specific friction point in [Framework X] and want to see if your workflow matches mine."

The Extraction Script

Use these three questions to uncover the "Prompt Sequence" worth packaging:

- "Show me the last 3 files you had to refactor manually because the AI kept getting it wrong."
  - What this reveals: The gap between generic LLM knowledge and specific architectural constraints. This is your `.cursorrules` context.

- "What is the one task you procrastinate on because it requires opening 5 different documentation tabs?"
  - What this reveals: High cognitive load tasks. If you can embed that documentation knowledge into a Cursor rule, you remove the context switching.

- "If you could pay $50 to never write a specific type of boilerplate code again, what would it be?"
  - What this reveals: Willingness to pay at a concrete price point, and which specific pain is most intense.

Key Insight: You are looking for repeatable complexity. If a developer says, "I hate writing Zod schemas that match my Prisma schema," that is a goldmine. It is a deterministic, rule-based task that LLMs struggle with unless primed with specific instructions.
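After 10-15 interviews, tag each pain you heard with a short normalized label and count how many separate interviews mention it; repeatable complexity shows up as a tag that recurs across people, not one loud complaint. A minimal sketch, assuming a 30% recurrence cut-off that you should treat as a tunable guess; `recurring_pains` is a hypothetical helper and the tags are made up.

```python
from collections import Counter


def recurring_pains(interviews: list[set[str]], min_share: float = 0.3) -> list[tuple[str, int]]:
    """Return pain tags mentioned in at least `min_share` of interviews, most common first.

    Each interview is a set, so a pain repeated three times in one call counts once.
    """
    if not interviews:
        return []
    counts = Counter(tag for tags in interviews for tag in tags)
    hits = [(tag, n) for tag, n in counts.items() if n / len(interviews) >= min_share]
    return sorted(hits, key=lambda pair: (-pair[1], pair[0]))


interviews = [
    {"zod-from-prisma", "rls-policies"},
    {"zod-from-prisma"},
    {"zod-from-prisma", "webpack-config"},
    {"rls-policies"},
    {"zod-from-prisma", "rls-policies"},
    {"zod-from-prisma"},
    set(), set(), set(), set(),
]
print(recurring_pains(interviews))
# [('zod-from-prisma', 5), ('rls-policies', 3)]
```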

The "Ghost Test": Validating Without Building

Once you have a hypothesis (e.g., "A Cursor Rule set for perfect Supabase Row Level Security policies"), you must validate willingness to pay before creating the full library.

We use the Ghost Test methodology. This involves offering the value proposition to a small segment of traffic to measure intent.

Step 1: The Minimum Viable Offer (MVO). Draft a simple Notion page or a single tweet/Discord message that describes the outcome, not the product.

- Bad: "I'm making a Cursor Rules pack for Supabase."

- Good: "I'm building a private rule set that forces Cursor to write bulletproof Supabase RLS policies automatically. It prevents the 3 most common security leaks. Looking for 10 beta testers."

Step 2: The Friction Mechanism. Do not just give it away. Add a friction step to prove intent.

- "Reply with 'Secure' and I'll DM you."

- "Join the waitlist here (email required)."

- "Pre-order for $5 (fully refundable if I don't launch)."

Step 3: The Go/No-Go Threshold. Set your metrics before you launch the test to avoid confirmation bias.

- Traffic Source: Post in one relevant Discord channel (e.g., Supabase developers) and your own LinkedIn/Twitter (even if small).

- Success Metric: 10 qualified leads (people who raised their hand) or 3 pre-sales.

- Timebox: 48 hours.

If you don't hit the threshold, do not build. Go back to the GitHub mining phase. The market has spoken, and it saved you 4 weeks of development time.
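Because the thresholds are fixed before the test starts, the verdict can be computed mechanically from a timestamped log of responses. A minimal sketch: `Response` and `ghost_test_verdict` are hypothetical names, and it assumes you record each hand-raise and each $5 pre-order separately (a pre-order is not also counted as a lead here).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Thresholds fixed before the test, per Step 3 above.
TIMEBOX = timedelta(hours=48)
LEAD_THRESHOLD = 10
PRESALE_THRESHOLD = 3


@dataclass(frozen=True)
class Response:
    at: datetime
    kind: str  # "lead" (replied or joined the waitlist) or "presale" (refundable pre-order)


def ghost_test_verdict(started: datetime, responses: list[Response]) -> str:
    """GO if either threshold is met inside the 48-hour window, otherwise NO-GO."""
    deadline = started + TIMEBOX
    in_window = [r for r in responses if started <= r.at <= deadline]
    leads = sum(1 for r in in_window if r.kind == "lead")
    presales = sum(1 for r in in_window if r.kind == "presale")
    return "GO" if leads >= LEAD_THRESHOLD or presales >= PRESALE_THRESHOLD else "NO-GO"


start = datetime(2024, 5, 6, 9, 0)
log = [Response(start + timedelta(hours=h), "lead") for h in range(9)]
log.append(Response(start + timedelta(hours=60), "presale"))  # too late to count
print(ghost_test_verdict(start, log))  # NO-GO: 9 leads, 0 in-window pre-sales
```

Filtering by timestamp keeps late responses from quietly rescuing a test that already failed its timebox.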

From Signal to System

By the end of this phase, you should have:

- A verified problem derived from public "friction" data.

- Qualitative confirmation from 10+ developer interviews.

- A list of "hand-raisers" waiting for your solution.

You are no longer guessing. You are building into proven demand.

What You'll Build On:

In the upcoming sections, we will take this validated concept and turn it into a tangible asset.

- Section 3: We will architect the actual `.cursorrules` file structure, focusing on modularity and context optimization.

- Section 4: We will cover "Packaging as a Product": how to wrap your simple text files into a premium digital good with documentation and versioning.

- Section 5: We will maximize revenue by creating tiers (Basic Rules vs. Enterprise Team Contexts).

The difference between a hobbyist sharing a prompt and a business owner selling a workflow is validation. You now have the validation. Next, we build the product.

This is a preview of the "Launch Your Paid Cursor AI Rules Business" course. The full curriculum includes detailed interview scripts, regex patterns for GitHub mining, and the exact Notion templates used to pre-sell $5k of rules before launch.

Course Details

- Sections: 8 sections

- Price: $9.99